Eye tracking has become an essential human-machine interaction modality for providing immersive experiences in numerous virtual and augmented reality (VR/AR) applications that demand high throughput (e.g., 240 FPS), a small form factor, and enhanced visual privacy.

However, existing eye tracking systems are still limited by (1) their large form factor, largely due to the bulky lens-based cameras they adopt, and (2) the high communication cost between the camera and the backend processor, which prohibits their wider application.

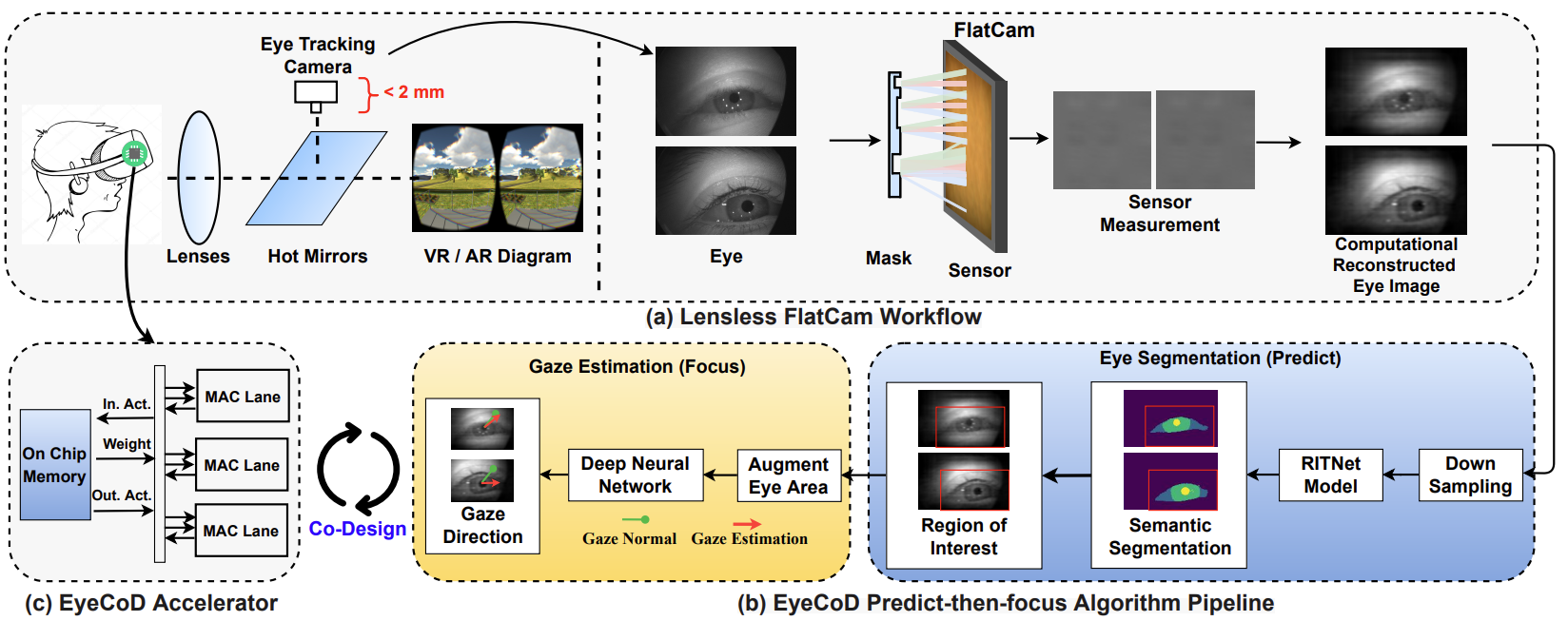

To this end, we propose EyeCoD, a lensless FlatCam-based eye tracking algorithm and accelerator co-design framework that enables eye tracking systems with a much smaller form factor and higher system efficiency without sacrificing tracking accuracy, paving the way for next-generation eye tracking solutions. On the system level, we advocate the use of lensless FlatCams to meet the small form-factor requirement of mobile eye tracking systems. On the algorithm level, EyeCoD adopts a predict-then-focus pipeline that first predicts the region of interest (ROI) via segmentation and then focuses only on the ROI to estimate gaze directions, greatly reducing redundant computations and data movements. On the hardware level, we further develop a dedicated accelerator that (1) integrates a novel workload orchestration between the segmentation and gaze estimation models, (2) leverages intra-channel reuse opportunities in depth-wise layers, and (3) uses input feature-wise partitioning to reduce the activation memory size.
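The predict-then-focus idea can be illustrated with a minimal sketch. It assumes the segmentation stage outputs a binary eye mask, which is reduced to a padded bounding box whose crop is the only data passed to the gaze stage. The functions `segment` and `estimate_gaze`, the helper `roi_from_mask`, and the `margin` parameter are hypothetical placeholders, not EyeCoD's actual models or interfaces. The real pipeline runs neural networks on FlatCam data on the dedicated accelerator.

```python
import numpy as np


def roi_from_mask(mask, margin=4):
    """Return (top, bottom, left, right) of the bounding box around the nonzero
    mask pixels, padded by `margin` and clipped to the image bounds.
    (Hypothetical helper, for illustration only.)"""
    rows = np.flatnonzero(mask.any(axis=1))  # row indices containing mask pixels
    cols = np.flatnonzero(mask.any(axis=0))  # column indices containing mask pixels
    if rows.size == 0:
        # Nothing segmented: fall back to processing the full frame.
        return 0, mask.shape[0], 0, mask.shape[1]
    top = max(int(rows[0]) - margin, 0)
    bottom = min(int(rows[-1]) + 1 + margin, mask.shape[0])
    left = max(int(cols[0]) - margin, 0)
    right = min(int(cols[-1]) + 1 + margin, mask.shape[1])
    return top, bottom, left, right


def predict_then_focus(image, segment, estimate_gaze, margin=4):
    """Stage 1 predicts the ROI via segmentation; stage 2 runs gaze estimation
    on the cropped ROI only. `segment` and `estimate_gaze` are hypothetical
    stand-ins for the two models."""
    mask = segment(image)
    top, bottom, left, right = roi_from_mask(mask, margin)
    roi = image[top:bottom, left:right]  # only this crop reaches stage 2
    return estimate_gaze(roi), (top, bottom, left, right)


if __name__ == "__main__":
    # Toy frame: a bright 10x15 "eye" patch in a 64x64 image.
    frame = np.zeros((64, 64))
    frame[20:30, 30:45] = 1.0
    # Toy models: threshold as "segmentation", crop shape as "gaze output".
    gaze, box = predict_then_focus(frame, lambda img: img > 0.5, lambda roi: roi.shape)
    print(box, gaze)  # (16, 34, 26, 49) (18, 23)
```

In this toy run, the gaze stage sees 18 x 23 = 414 of the 4,096 pixels. That reduction in data handed to the second model is the kind of redundancy the predict-then-focus pipeline targets, though the actual savings in EyeCoD depend on its models and data.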

Extensive experiments validate that EyeCoD consistently reduces both communication and computation costs, yielding overall system speedups of 10.95x, 3.21x, and 12.85x over CPUs, GPUs, and a prior-art eye tracking processor called CIS-GEP, respectively, while maintaining tracking accuracy.

@inproceedings{you2022eyecod,
  title={EyeCoD: eye tracking system acceleration via flatcam-based algorithm \& accelerator co-design},
  author={You, Haoran and Wan, Cheng and Zhao, Yang and Yu, Zhongzhi and Fu, Yonggan and Yuan, Jiayi and Wu, Shang and Zhang, Shunyao and Zhang, Yongan and Li, Chaojian and others},
  booktitle={Proceedings of the 49th Annual International Symposium on Computer Architecture},
  pages={610--622},
  year={2022}
}

@inproceedings{zhao2022flatcam,
  title={i-FlatCam: A 253 FPS, 91.49 $\mu$J/Frame Ultra-Compact Intelligent Lensless Camera for Real-Time and Efficient Eye Tracking in VR/AR},
  author={Zhao, Yang and Li, Ziyun and Fu, Yonggan and Zhang, Yongan and Li, Chaojian and Wan, Cheng and You, Haoran and Wu, Shang and Ouyang, Xu and Boominathan, Vivek and others},
  booktitle={2022 IEEE Symposium on VLSI Technology and Circuits (VLSI Technology and Circuits)},
  pages={108--109},
  year={2022},
  organization={IEEE}
}